Visual Design of Robot-Assisted Surgery for the Hearing Impaired

Catherine Tsai, 2019

How can designing audience-specific visualizations improve the user experience of a novel robot-assisted surgical platform? What visual aspects are important to improve accuracy, orientation, and safety for the surgeon user? What visual aspects are important to reduce fear and improve trust for patients about to undergo the surgery?

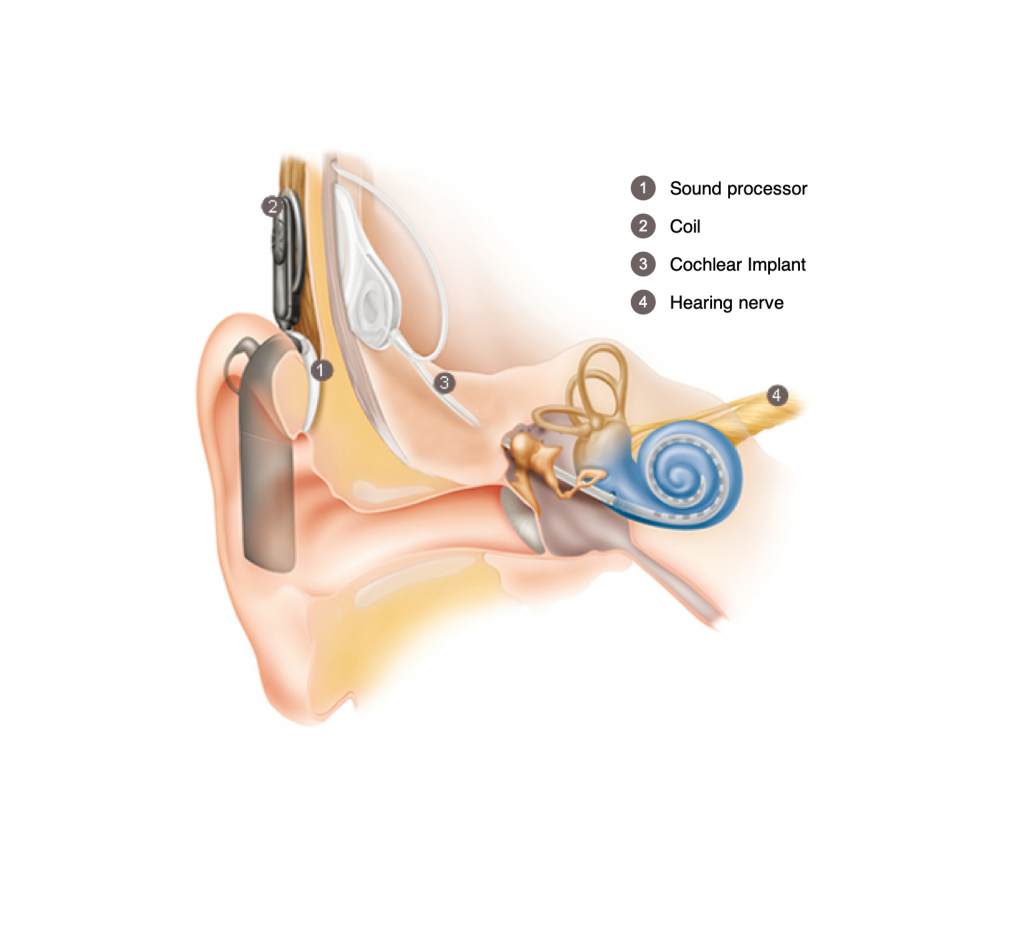

What is a cochlear implant?

A sound processor (1) captures sound and converts it into a digital code. The code is sent through a coil (2) to the implant (3). The implant sends an electrical signal through an electrode that must travel through the side of the skull to reach the cochlea, our hearing organ. The hearing nerve (4) transmits the signal as impulses to the brain, which interprets these as sound.

Picture from Cochlear®.

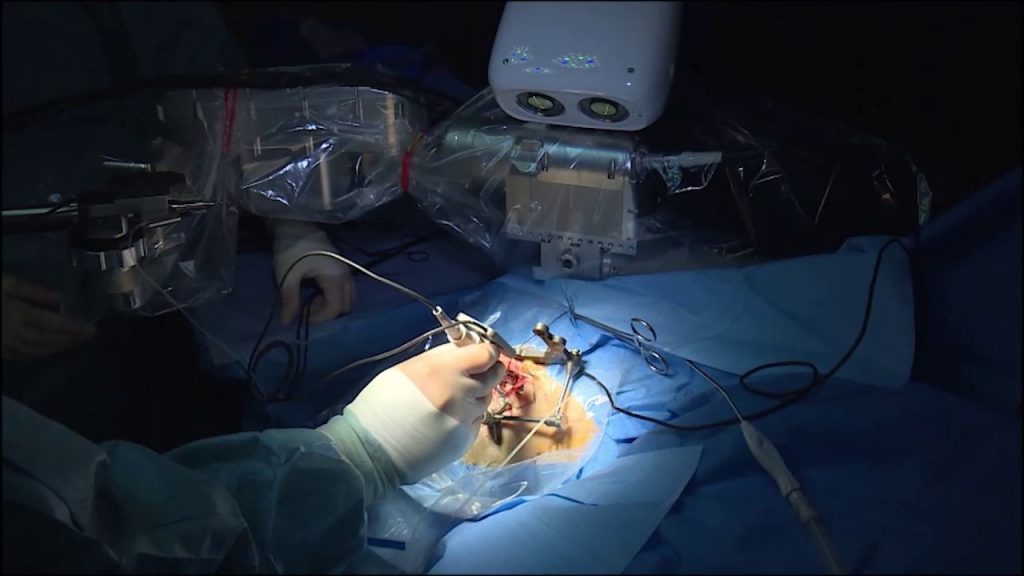

A robot assists a surgeon

A robot with an optical tracker and drill assists a head and neck surgeon with difficult parts of cochlear implantation surgery.

Picture from ARTORG Center.

Background

Five percent of the world's population is deaf or hard of hearing. The cochlea is the organ that allows us to hear, and thousands of cochlear implantations are performed each year to restore the sense of hearing. Because ear anatomy is complex and the surgery is technically challenging, 30–50% of cases are not successful. With modern technology, surgeons like myself now have a solution: surgical robots. Robots can overcome human limitations, making the surgery more effective, less invasive, and safer for the patient.

Aims

Programming the surgical robot requires creating 3D models from patient-specific CT scans. The aim of this project was to explore the visual needs of the various audiences of this novel surgical system.

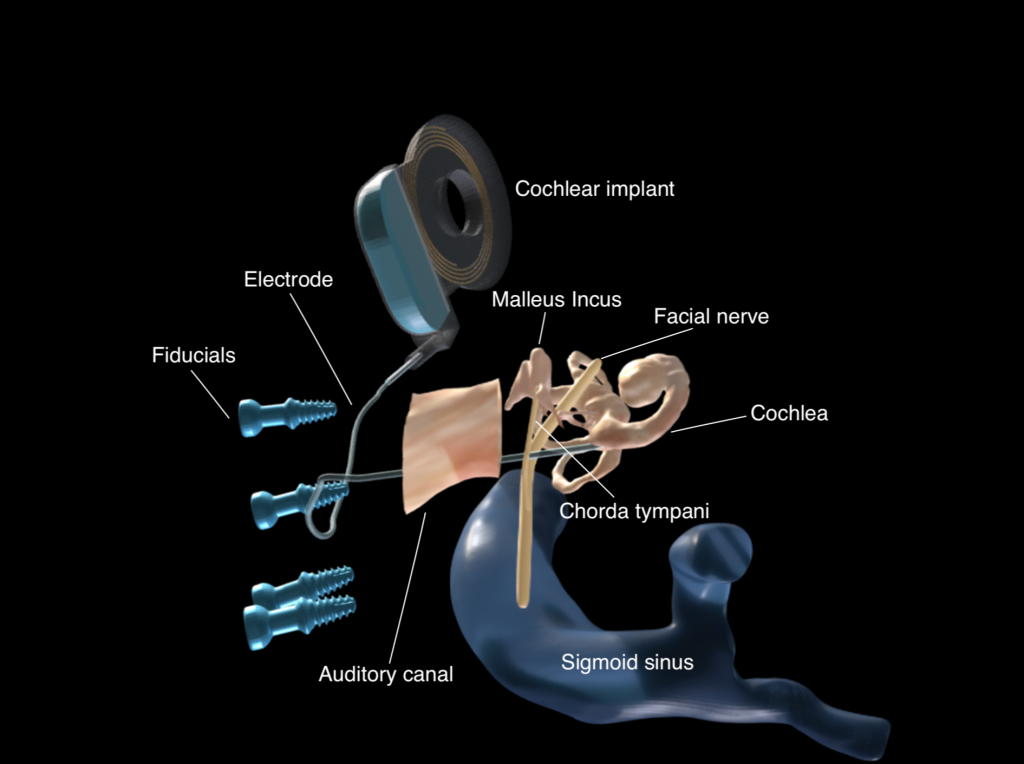

From Pixels to 3D Models

Patient-specific 3D ear and skull models are generated from the pixels of a computed tomography (CT) scan using Amira software.

Using Cinema 4D, various visual themes are created. Models are shared on Sketchfab to test whether the visualizations improve viewers' accuracy in identifying structures and their ability to orient them.

After the final designs are created, engineers at ARTORG Center program them into Otoplan.

Methods

After using Amira to generate 3D models from CT scans, I used Cinema 4D to experiment with various visual styles. In collaboration with engineers and surgeons, I conducted focus groups, in-person interviews, and surveys to compare the surgeon user experience of lifelike visualizations with that of the baseline unnaturally colored visualizations. Quantitative studies were performed to test the effect of lifelike visualizations on the ability to orient and identify anatomical structures. Interviews and surveys were used to explore how best to use visualizations to guide the patient experience of undergoing robot-assisted surgery.

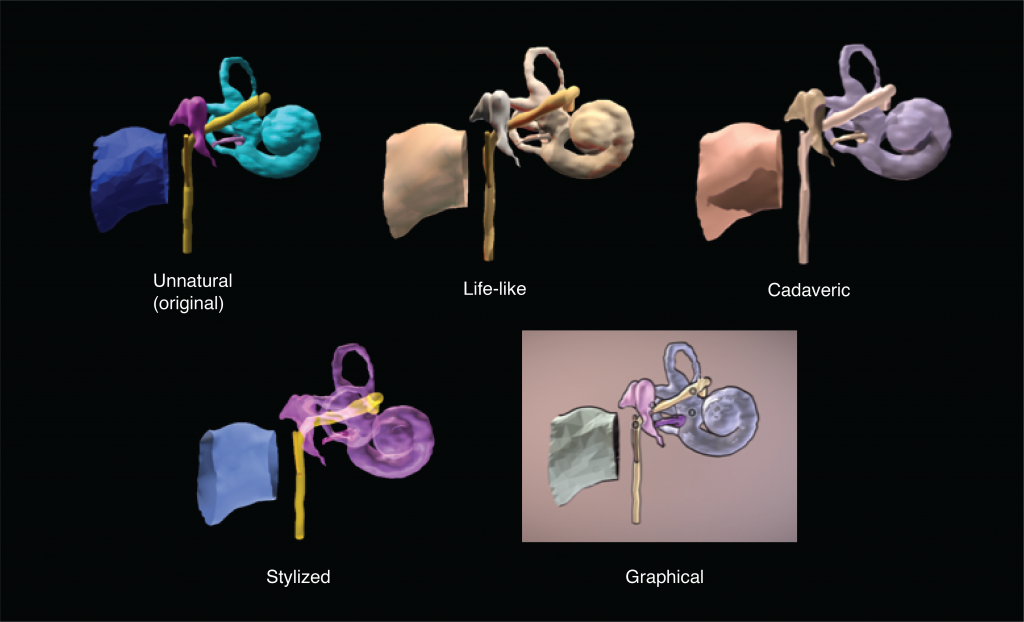

Testing various visual styles

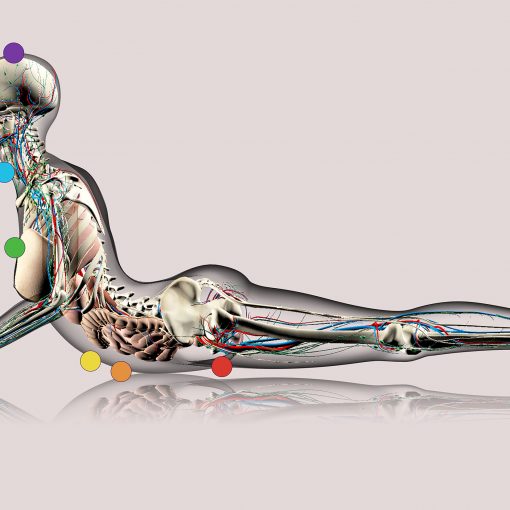

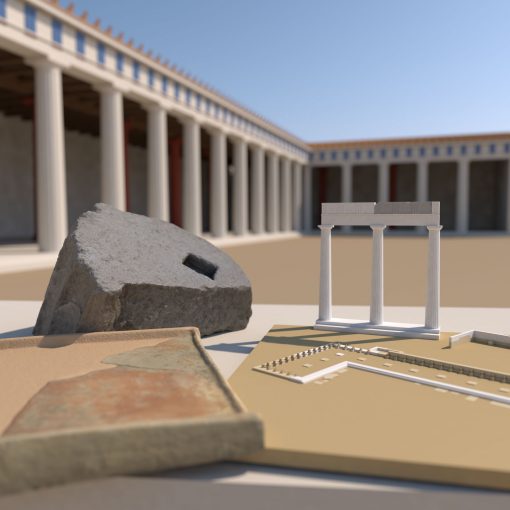

After a web-based search on various visual styles commonly used to portray 3D-generated anatomy from CT scans, I created 5 visual styles of my ear models to test with surgeons: unnaturally-colored, life-like, cadaveric, and stylized. The visual styles were created in Cinema 4D and uploaded to Sketchfab for sharing. Visual preferences were qualitatively tested in focus groups and interviews.

Results and Conclusions

Lifelike visualizations improve the user experience for surgeons regarding accuracy, orientation, and satisfaction, while graphical visualizations can enhance patient understanding and trust in a robot-assisted surgical system.

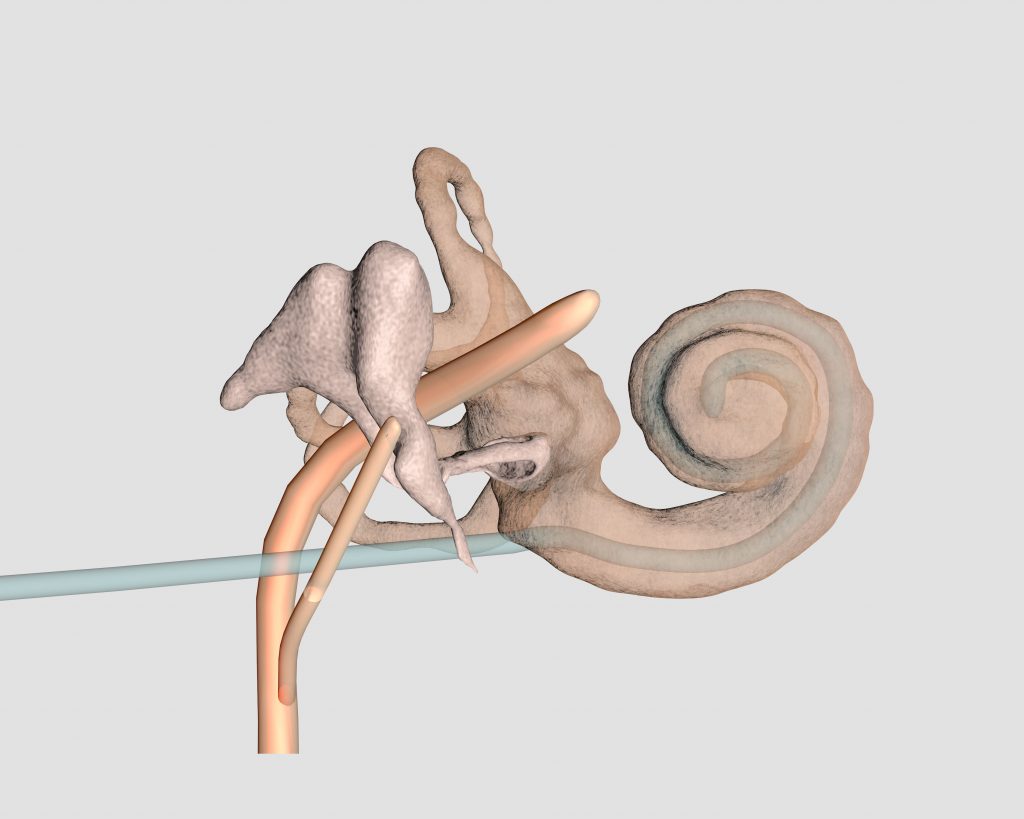

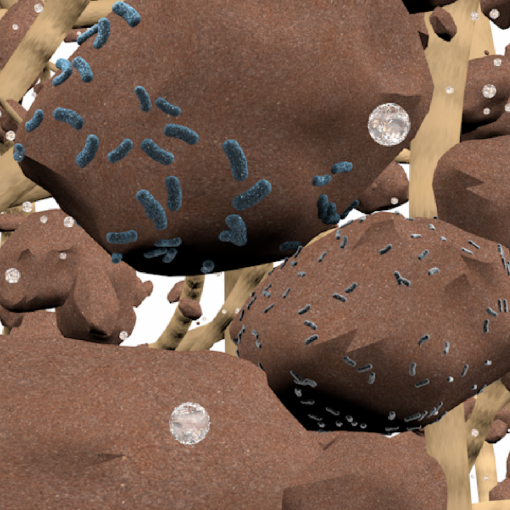

Life-like 3D reconstruction of the cochlea, malleus, incus, stapes, and facial nerves from a patient's CT scan. Transparency allows us to see the cochlear implant electrode threading into and coiling within the cochlea.

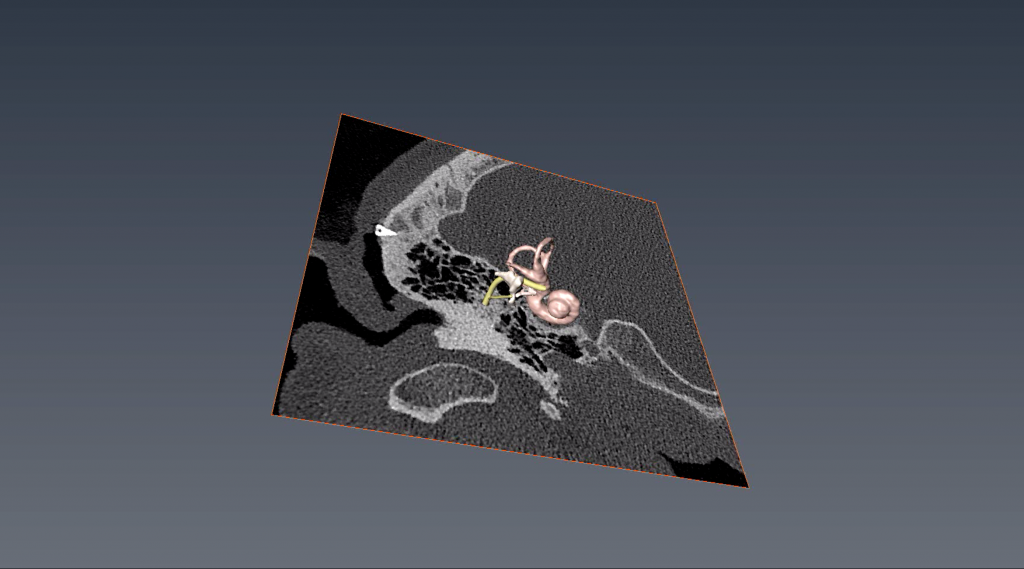

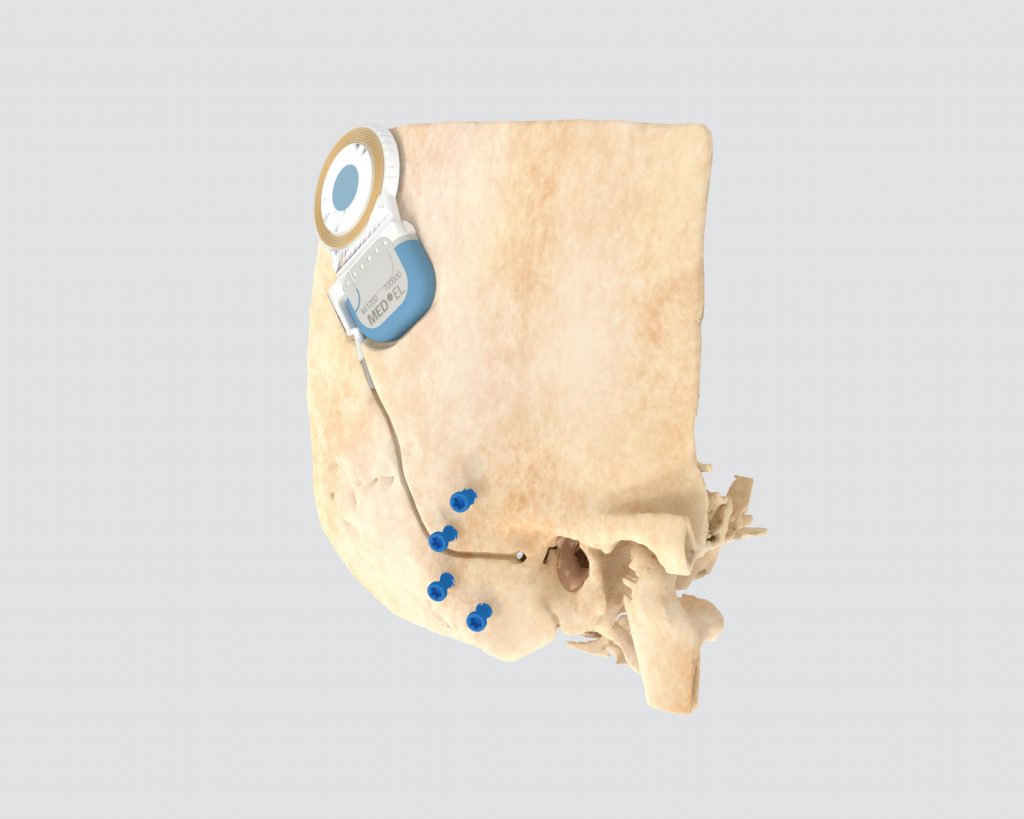

Life-like 3D reconstruction of a patient's skull with cochlear implant and blue fiducials (screws). Fiducials allow the robot to capture the movement of the patient's head through optical tracking.

Master project 2018–2019

Mentoring

Fabienne Boldt

Simon Schachtli

Cooperation partners

ARTORG Center for Biomedical Engineering at the University of Bern

Inselspital, University Hospital of Bern

Mentor: Stefan Weber

Contact

Email Catherine Tsai for more information: catherine.tsai@zhdk.ch