Friendly Fire is an interactive homage to Joseph Weizenbaum’s psychotherapy simulation «Eliza» (1966), the world’s first computer chatbot. Weizenbaum was shocked by how quickly and deeply users became emotionally involved with Eliza – and how unreservedly they treated it as if it were human. As a German-born Jewish scientist who fled the Nazis, Weizenbaum worried about dehumanization. He argued that certain human activities – like interpersonal relations and decision-making – should remain inherently human, and that the uncritical adoption of AI could erode whole aspects of human life.

Weizenbaum died in 2008. In 2022, the chatbot application «ChatGPT» was released and set a record as the fastest-growing software application ever, reaching 100 million users in just two months. «Friendly Fire» uses ChatGPT for a creative reimagining of Weizenbaum’s work, exploring the fears and desires that artificial «intelligence» triggers in us.

Credits:

Manuel Flurin Hendry (Artistic Direction, Production)

Norbert Kottmann (Programming)

Paulina Zybinska (Programming)

Meredith Thomas (Programming)

Florian Bruggisser (Programming)

Linus Jacobson (Scenography)

Marco Quandt (Light Design)

Domenico Ferrari (Audio Design)

Martin Fröhlich (Projection Mapping)

Stella Speziali (Scanning and Motion Capture)

Hans-Joachim Neubauer, Anton Rey, Christopher Salter (Project Supervision)

Supported by Migros Kulturprozent, Speech Graphics

In cooperation with Immersive Arts Space, Institute for the Performing Arts and Film, ZHdK Film

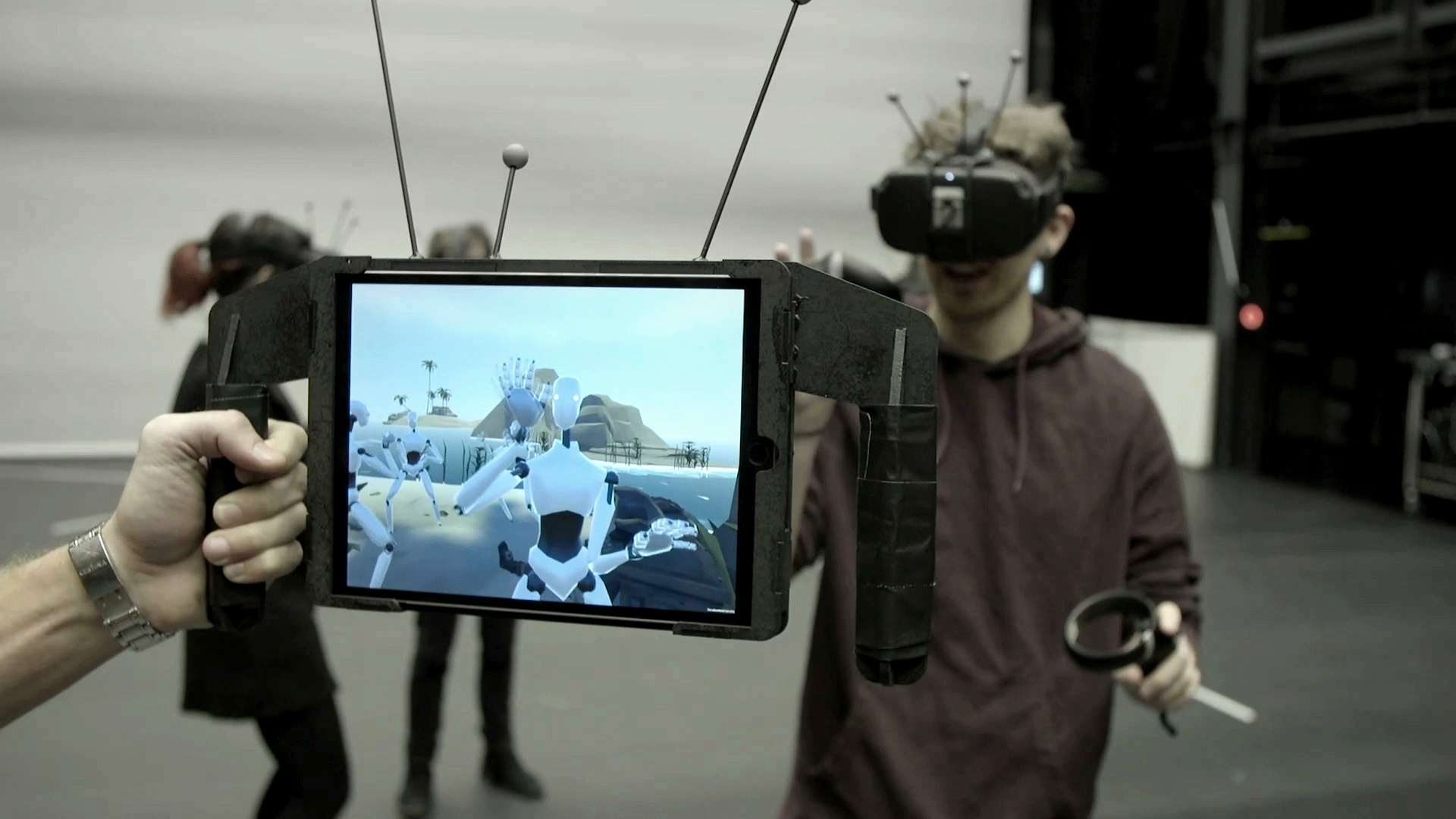

Friendly Fire was developed out of the artistic-scientific project “The Feeling Machine”, in which Manuel Flurin Hendry explored the question of artificial emotionality. “The Feeling Machine” is Manuel Hendry’s PhD thesis, part of the joint PhD program of the Filmuniversität Babelsberg Konrad Wolf and the ZHdK. Since 2022, the project has been developed artistically and technologically in the Immersive Arts Space.

Find more information here, along with the six conversations Stanley has held with different people so far.

The linguistic simulation was developed with the help of a so-called pre-trained Large Language Model (LLM), a language-processing algorithm. Stanley emerged from months of development in the Immersive Arts Space, with the help of our scientific collaborators Norbert Kottmann, Martin Fröhlich and Valentin Huber. In the early stages, a physical mask was developed with which the audience could converse directly in spoken language in a museum-like setting. This being will be programmed as an Unreal MetaHuman with the Omniverse Avatar Cloud Engine (ACE), and its physical form will be generated with projection mapping.

Stanley and “The Feeling Machine” were part of the exhibition “Hallo, Tod! Festival 2023” and were on display for visitors to chat with at Sihlfeld Cemetery in Zurich.

Read more about it in an article in the Tages-Anzeiger.