As a hub for research-creation, the Immersive Arts Space tackles a multifaceted challenge by pursuing and promoting an integrated program of transdisciplinary inquiry in and across research, teaching, service and production. The team members have their professional roots in film, game design, interaction design, music, computer science and engineering, and often members have a background in more than one discipline. The IASpace is part of the research cluster of the Digitalization Initiative of the Zurich Higher Education Institutions (DIZH).

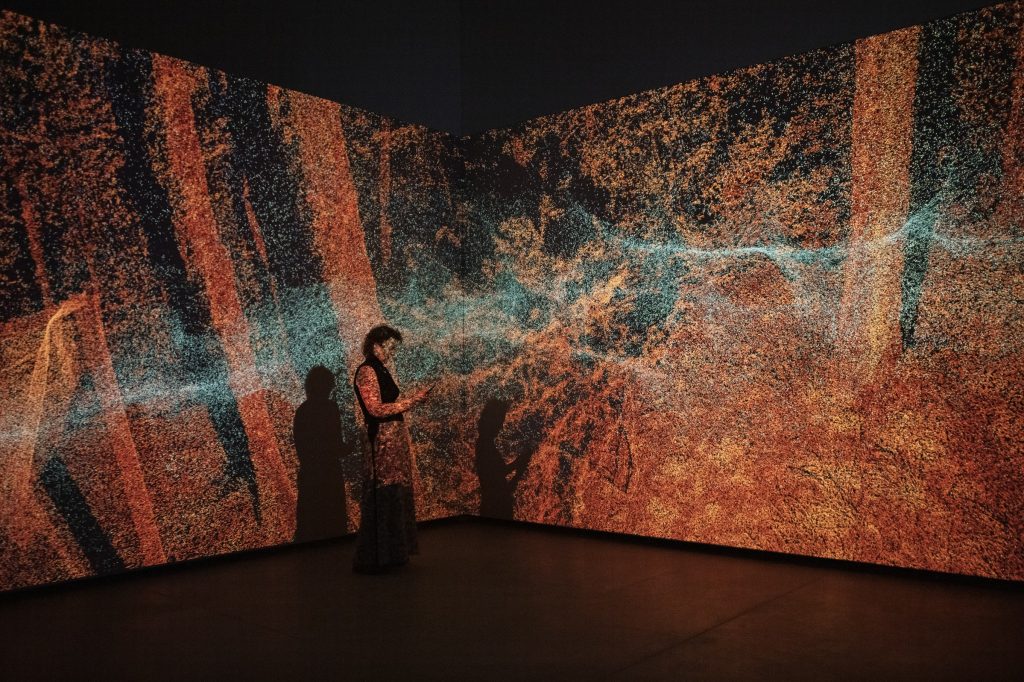

Immersion Studies for Climate Experiences (2025-2028)

Supported by SNSF, Practice to Science

Rasa Smite, PI (Principal Investigator)

This project explores how complex climate data can be transformed into immersive experiences that enhance public understanding and engagement. By combining artistic experimentation with scientific data, it aims to develop innovative methods for making climate change more accessible—turning abstract environmental shifts into emotional, tangible experiences.

The research focuses on three key questions: (1) How can complex climate science data be transformed into sensory, immersive experiences that help us imagine and understand the invisible processes in nature and society affected by climate change? (2) What kinds of collaborations and experimental tools between art and science are needed to make this transformation possible? (3) How can the effects of such immersive experiences on public awareness and emotional engagement with climate issues be studied?

The three-year project will involve fieldwork and the translation of collected data into immersive (XR) environments, which will be exhibited and analyzed in public venues. Reflexive and multimodal ethnography will be used to examine the scientific research process, artistic experimentation, and how audiences respond to and engage with immersive environments—co-creating new ways of sensing and communicating environmental change.

Hosted by the Immersive Arts Space, the project will result in workshops, exhibitions, video interviews, and publications. It will contribute new knowledge at the intersection of climate-related arts and sciences and offer an original, critical contribution to interdisciplinary research and education on climate and environmental issues within ZHdK, across Switzerland, and internationally.

Probing XR’s Futures. Design Fiction, Bodily Experience, Critical Inquiry

Swiss National Science Foundation (SNSF) project, 2023-2027

Extended reality (XR) devices like Apple’s recently announced Vision Pro or Meta’s Oculus Quest 3 enable new possibilities for mixing the real world with a computationally generated one, promising to “change interaction as we know it.” Yet, there is little research on exactly how XR might reshape bodily subjectivity and experience. Probing XR’s Futures uses a critically and historically informed, practice-based design approach to examine how XR technologies reimagine bodily subjectivity, interaction and experience, on the one hand, and how bodily experience could reimagine XR, on the other. The 4-year project employs critical, creative, conceptual and empirical approaches to address three questions: How is everyday interaction in XR achieved? How will XR change interaction and what social reciprocity and mutual access will be enabled? What concrete effects and forms of discipline will be enacted on disabled bodies interacting in XR? The objective is to use design fiction, a design research method that prototypes objects and scenarios to provoke new ways of thinking about the future, as a form of critical inquiry to probe the present and future of social interaction in XR in three different settings and contexts: the lab, public space, and collaboration with disabled researchers and communities. Situated at the Immersive Arts Space at the Zurich University of the Arts, the project sits at the interdisciplinary intersection of Critical VR studies, Science and Technology Studies (STS) and experimental media design. It will be one of the first projects in Swiss and German-speaking design research to develop alternative thinking and experimental aesthetic-design analysis, reflection and critique of XR directly in situated action and use with the general public.

Team:

Christopher Salter (Project Lead)

Philippe Sormani (Senior Researcher)

Puneet Jain (PhD Candidate)

Chris Elvis Leisi (Researcher)

Oliver Sahli (Researcher)

Stella Speziali (Researcher)

Pascal Lund-Jensen (Researcher)

Project Partners:

Andreas Uebelbacher (Access for All Foundation)

John David Howes (Concordia University Montreal, Sociology/Anthropology)

Sabine Himmelsbach (Haus der elektronischen Künste Basel, HeK)

Pilar Orero (Universitat Autònoma de Barcelona, Transmedia Research Group)

Lorenza Mondada (Universität Basel, Institut für Französische Sprach- und Literaturwissenschaft)

SNSF project: Performing Artificial Intelligence

Swiss National Science Foundation (SNSF) project, 2025-2029

Performing AI’s goal is to contextualize AI as a dynamic social and cultural artifact that is discursively and practically constituted (that is, performed) in specific contexts and situations. In other words, the project asks what “AI” does, why and how it does what it does, and what effects it produces across different disciplines. The project uses the theoretical and conceptual lenses of performance and performativity to navigate AI’s messy entanglements between the social and political, technical and aesthetic.

The project has three core objectives: (1) understand how AI is performed differently in its multiple constitutions (discursive, material, situated) and in/across disciplines; (2) provide interdisciplinary research and training opportunities for a next generation of researchers to grapple with the complex, multi-scalar nature of AI; and (3) explore new forms of critical public engagement with AI across arts, science, policy and technology.

Performing AI will thus study AI’s performances in the making in three sites: the policy space, experimental scientific and artistic research labs, and otherwise mundane spaces. Examining AI in the making, the project explores how AI is discursively enacted in policy and governance and examines the material agency of AI in robotics, artificial life and digital arts, where human actors have to interact with machinic systems in real time. It also draws upon and develops ethnographic and ethnomethodological approaches to trace the situated action and production of AI in everyday public settings, including a museum, as well as in hybrid art, science and technology laboratories.

Project Partners:

Anna Jobin (University of Fribourg)

Olivier Glassey (University of Lausanne)

Takashi Ikegami (University of Tokyo)

Christopher Salter (Zurich University of the Arts)