On this page you can find current and upcoming projects. We develop and host a variety of projects, such as student graduation projects, internal research projects and collaborations with external partners.

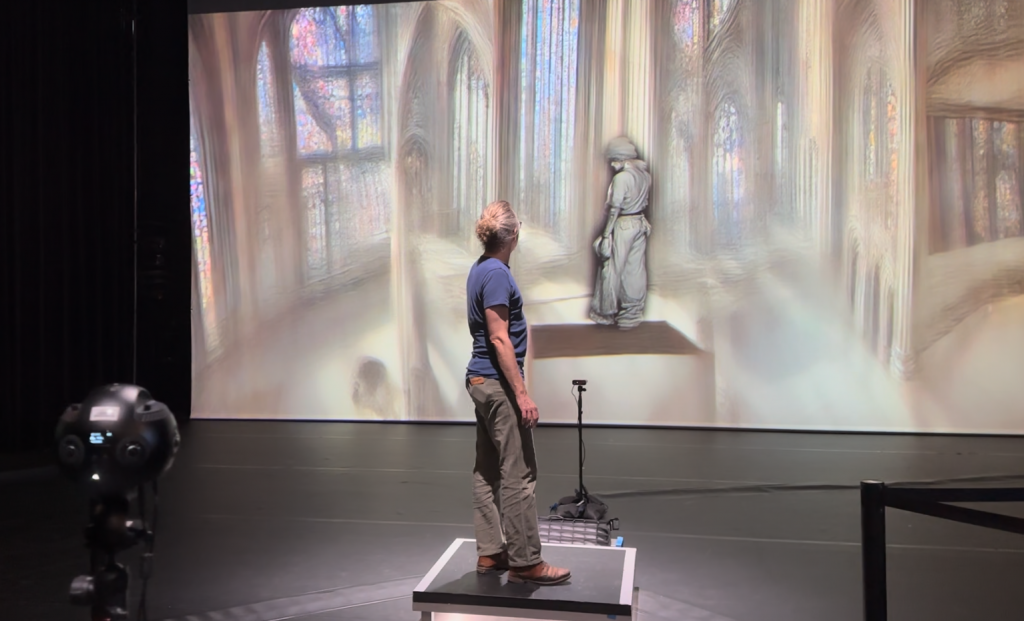

A Hero’s Return

The interactive installation A Hero’s Return transforms visitors’ poses into a digitally generated alter ego. The character and its background are created in real time by artificial intelligence models. This installation prototype explores the capabilities of these models (such as Stable Diffusion) and focuses on their technical and aesthetic possibilities in combination with the performative interaction with visitors. The installation also incorporates popular cinematic techniques: a virtual camera performs a circular tracking shot around the scanned visitor’s body, reminiscent of scenes from numerous action hero films.

Credits:

Artists: Martin Fröhlich, Stella Speziali

Thanks to: Ege Seçgin

doppelgaenger:apparatus

doppelgaenger:apparatus is a multiuser mixed reality experience that enables participants to confront their computer-generated 3D doubles in a real physical surrounding. Using a custom-designed AI-based process, a frontal 2D image of each participant is taken and quickly transformed into an animated 3D reconstruction. From the moment the visitors put on the headset, they see the real environment captured by a camera on the headset. Gradually, this familiar space shifts, with the appearance of the visitors’ doubles standing before them. Co-present with other participants, visitors intimately interact with their ‘mirror image’ while observing and interacting with others doing the same. While at first the participants get the feeling of controlling their ‘mirror image’, this rapidly shifts as their doubles, free from the constraints of the original bodies, start to invade the participants’ personal space, leading to a disturbing and uncanny play between them. Historically, a doppelgänger was seen as a ghostly counterpart of a living person and as an omen or sign of death. Freud later argued that encountering one’s double produces an experience of “doubling, dividing and interchanging the self.” This installation thus takes the next step in exploring how new digital systems increasingly produce uncanny tensions between our bodies and their captured other.

Credits:

Artist and Development: Chris Elvis Leisi

Artist and Sound: Christopher Lloyd Salter

Machine Learning: Florian Bruggisser

Past exhibitions:

MESH Festival, 16 to 20 October 2024 at HEK in Basel. More information [here]

REFRESH x FANTOCHE, 4 to 8 September 2024

BODIES-MACHINES-PUBLICS

A glocal network exploring bodies in the age of computer-mediated reality

BODIES-MACHINES-PUBLICS brings together a “glocal” (global and local) network of cultural and research partners in Switzerland, India and Chile that seek to provide time and space for artists to develop prototypes and works exploring the sensitive body and technical mediation in relation to public space. The project is a two-year collaborative initiative between NAVE (Chile), KHOJ Studios (India), Immersive Arts Space/ZHdK (Zurich, Switzerland) and Kornhausforum (Bern, Switzerland) and is supported by the Pro Helvetia Synergies Program.

People increasingly interact with technologies such as wearable sensors, VR/AR headsets or other data-gathering systems on an intimate, bodily level that blurs the lines between the physical and the digital, and between the body and its environment. At the same time, the involuntary data collection and machine-led decisions that arise from these sensing technologies exacerbate historical inequalities, particularly affecting marginalized groups. Addressing the capture of human motion, thoughts and experience through new technologies is therefore an ongoing challenge that requires new kinds of creative and imaginative practices.

Residency 2025

Open Call for Residency at Immersive Arts Space and Kornhausforum

BODIES-MACHINES-PUBLICS invites Indian and Swiss visual, performing and media artists, designers, architects or researchers working between art, technology and science to apply for a shared residency at the Immersive Arts Space and Kornhausforum Bern in Switzerland. Artists can apply through an open call until 13th April 2025. The residency will last four weeks, two weeks in each institution (Immersive Arts Space and Kornhausforum), between 8th September and 5th October 2025. The aim of the residency is to develop prototypes and works that can be publicly exhibited.

The application deadline has passed.

Open Call for Residency at NAVE

Residency 2024

The project starts this year with the combined residencies at the Immersive Arts Space and Kornhausforum. The two-part residency starts on 9th September at the IAS and then moves to the Kornhausforum until 5th October 2024, where there will be a public presentation of the projects. Two artists, one from Switzerland and one from Chile, will share the spaces and work on their projects with the aim of exchanging ideas artistically as well as culturally.

The Swiss artist is Ludovica Galleani d’Agliano, who graduated in 2023 from Interaction Design (ZHdK) with her project Syntia_CAM. In the month-long residency she aims to develop Syntia_CAM into a live performance and thereby an interactive installation between the main characters and audiences.

Javier Muñoz Bravo is a composer in the field of instrumental and electronic music. Javier was trained at the University of Chile (Santiago) and the Institut de recherche et coordination acoustique/musique (Ircam) in Paris. Today, Javier is based in Paris and works together with choreographer Stefanie Inhelder and the cie glitch company, creating immersive dance performances. During the residency the duo will develop Une saison en métavers, an immersive piece with a female singer in combination with AI-driven visuals, motion capture and live electronics.

https://javiermunozbravo.com/

Zangezi

Zangezi is an ongoing artistic project developed by the Immersive Arts Space (artistic lead Chris Salter). The play has been presented and performed at various locations to date. Accordingly, the format and scope have changed and evolved.

Background

Zangezi is a Russian Cubo-Futurist poem/play written by the poet Velimir Khlebnikov 101 years ago in 1922. The story revolves around Zangezi, a prophet who speaks in the language ZAUM, a Russian word that is translated as “beyondsense.” Zangezi speaks with and can understand the birds, the gods and the stars, and speaks in their languages as well as in poetic language and in what Khlebnikov called “ordinary” language. The Immersive Arts Space is working on this text because, as a futurist work, Zangezi deals with a fundamental human question that is increasingly becoming a problem for machines: what is the basis of language? Is language only about meaning based on syntax? Is it about predictable sequences? Rules and probabilities? Or is there something else going on that is universal and cosmic about language, not as words and their meanings but as an act of sound?

Indeed, Khlebnikov, who had a mystical belief in the power of words, thought that the connections between sounds and meaning were lost during mankind’s history, and it was up to those in the future to rediscover them. 101 years later we ask how we might be able to approach Zangezi’s operations on multiple levels – cosmological, political, historical, technological – in a moment where we are increasingly surrounded by machines that produce something that appears like human language but in which there is no speaker, no body and no sound.

Zangezi – Performance

Under the artistic direction of Chris Salter (media artist and head of Immersive Arts Space), and in collaboration with the Immersive Arts Space (ZHdK) and an international team of artists from music, visual arts, performance, technology, and artificial intelligence, an immersive performance is being developed. The production combines spatial sound, lighting, music, graphics, and real-time AI-driven scenography generated with a game engine, alongside physical performance. A large-format LED wall serves as a central visual and lighting element. Digital landscapes, characters, and soundscapes are generated live and interact with the performers on stage. “Zangezi” addresses fundamental questions of the present: the relationship between humans and nature, the role of language, and war and conflict as driving forces of historical change. Following its premiere in Weimar, an international tour is in preparation.

The world premiere will be at the Kunstfest Weimar on 5 and 6 September 2025.

Tickets are available [here]

Credits:

Chris Salter (Artistic direction)

Remco Schuurbiers (Artistic collaboration)

Sébastien Schiesser (Light)

Pascal Lund-Jensen (Sound)

Erik Adigard (Graphic design)

Timothy Thomasson (Virtual environments)

Gonçalo Guiomar (AI-Systems)

Ramona Mosse, Judith Rosmair (Dramaturgy)

Audrey Chen, Judith Rosmair (Performer)

Simon Lupfer (Technical coordination)

Miria Wurm (Production management)

Dietmar Lupfer (Producer)

With artistic contributions from Stefanie Egedy (BR), The Future of Dance Company (NG), Immersive Arts Space / ZHdK (CH), kyoka (JP), Raqs Media Collective (IN), Team Rolfes (USA), Pierre-Luc Senecal / Growlers Choir (QC/CA), Rully Shabara (ID), Stella Speziali (CH), Fernando Velasquez (BR)

A production by Muffatwerk Munich in co-production with Kunstfest Weimar, Immersive Arts Space / ZHdK Zurich, with support from XR Hub Bavaria.

Funded by the German Federal Cultural Foundation. Funded by the Federal Government Commissioner for Culture and the Media.

Zangezi – A Film Installation

Zangezi was part of the Zurich Art Weekend in June 2025. For this event, the piece was further developed into a short film. It was shown on the state-of-the-art LED wall in the Film Studio of ZHdK.

Zurich Art Weekend took place from 13th to 15th June.

Zangezi Experiments

Zangezi-Experiments, a multimedia stage play, was performed at the MESH Festival. Before that, fragments of the piece were recreated as a work in progress for the REFRESH#5 2023 festival, in the form of a theatrically staged reading within the technical machine of the Immersive Arts Space itself.

Credits:

Chris Salter (Direction, Sound Design)

Corinne Soland (Performer)

Luana Volet (Performer)

Antonia Meier (Performer)

Stella Speziali (Development Meta Human)

Valentin Huber (Visual Design/Unreal Engine)

Pascal Lund-Jensen (Sound Design), formerly Eric Larrieux

Sébastien Schiesser (Light Design, show control)

Ania Nova (Russian voice over)

NEVO | Neural Volumetric Capture

NEVO is a processing pipeline that combines multiple machine learning models to convert two-dimensional images of a human into a volumetric animation. With the help of a database containing numerous 3D models of humans, the algorithm is able to create sketchy virtual humans in 3D. The conversion is fully automatic and can be used to efficiently create sequences of three-dimensional digital humans.

Group members: Florian Bruggisser (lead), Chris Elvis Leisi. Projects: Shifting Realities. Associated events: REFRESH X FANTOCHE, LAB Insights.

cineDesk

cineDesk is a versatile previsualization tool that provides real-time simulations for the development of film scenes, VR experiences and games in 3D spaces. In the preproduction process of films, cineDesk primarily supports scene blocking and lighting previsualization. Directors, cinematographers and production designers can collaboratively explore how space, props and acting are translated into cinematic sequences. The positions and movements of the virtual actors and the virtual camera in the 3D space are rendered in real time into 2D videos with the help of the Unreal game engine. This allows staging and visualization ideas to be explored interactively as a team. In addition, the virtual 3D space can be entered and experienced directly by means of virtual reality goggles.

cineDesk is a further development of the Previs Table, which goes back to a cooperation with Stockholm University of the Arts. Current research focuses on advanced features and multiple applications (e.g. for gaming, stage design and architecture).

Developers: Norbert Kottman, Valentin Huber. Videos: cineDesk, Scene-Blocking. Research field: Virtual Production.

> cineDesk has its own website. Please visit www.cinedesk.ch